Over the last couple of weeks my main focus on the raytracer has been to slowly decrease the processing time it took to render each sphere and overall tracing time. I have gone about this in a couple of ways, Researching data-oriented design, messed with the visual studio settings, started using openMP to pressed the processing and made an octree which all significantly help in optimising this raytracer.

Goals:

- Decrease the processing time from 26 minutes

- Research new methods of optimization

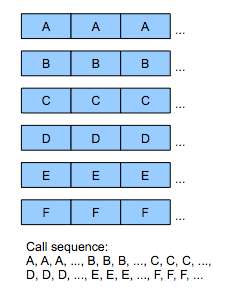

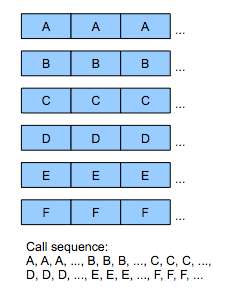

In the first week, I was unable to actually start coding for a time due to software issues with my visual studio and build of the raytracer. I used this time to instead do research into Data-Oriented Design (DOD). In terms of my finding and understanding of the topic now, DOD is about the data itself, what it is, how it is stored in memory and how it read and processed though your code. Instead of focusing on each individual object like you would with OOD, you break those objects down into the individual components and work with those instead along with storing the similar types together in memory.

What this does is allows for easier cache usage as it can pull in large block of similar data and not need to constantly search the memory as it can get what it needs with usually one pull, along with that it normally runs the same code over and over again which is also great cache utilisation. Other advantages involve parallelization and the ability to spread the processing across multiple cores and threads on the CPU and forms of hardware. This means you can have multiple bits of code running simultaneously instead of waiting for certain pieces to finish before others can start.

Data Oriented Design,

For a great blog on the topic, http://gamesfromwithin.com/data-oriented-design, where they talk about the advantages of using DOD over OOD and helped me understand the topic much better with it going over the above topics along with other like modularity and creating small function specific code that clean and easy to understand along with testing and implementation.

In terms of implementing this into the raytracer, that is still being tested on and implemented. I’m not entirely sure how I would go about it, methods I am trying to look for are lists and arrays that store Vector3 values and such, which can then be separated into their own individual lists of X, Y and Z values and instead of pointers, store these values together in memory. I can then use a for loop (a small, cache-friendly function) to loop thought these lists and get the positions of the each pixel. This can go further with adding parallelization to the mix and spreading the process across multiple cores so they can be done simultaneously.

In terms of methods that I have actually used and implemented into the raytracer. OpenMP is one of them, which is used for enabling the parallelization methods. With this I used it on the main loop of the ray tracer to spread it across the 8 cores in the CPU and therefore sped up the processing to roughly six minutes

Code:

// Primary loop through all screen pixels.

#pragma omp parallel for schedule(dynamic, 2)

for (int y = 0; y < windowHeight; y++)

{

//Stuff

}

What this does is, #PRAGMA OMP enables omp into the code, PARALLEL states that it will work on as many cores there are on the CPU and FOR is used to tell the code that the next line is a for loop and should prepare for that. SCHEDULE means it is scheduling an omp loop on the code and DYNAMIC means that it will take work from the top of the priority list whenever it finishes a task instead of waiting.

Another method of optimization I used was changing various settings in the raytracers properties within visual studio. The main change was enabling the “Whole Program Optimization” to Yes which enables cross-module optimization by delaying code generation, this change overall reduced it down a couple of seconds. Other settings I messed with where “Favor Size of Speed” to see the effect of each, where nether of them help, surprisingly, and actually decreased the speed of the raytracer by one to one and half seconds.

One of the largest changes to the code was the implementation of an Octree, Octrees are a form of spacial partitioning used to reduce the number of comparisons needed to determine and process what objects are on the scene by splitting the scene into 8 children nodes that store the ether the min or max angle of a particular area of the scene. This is then used to determine if something renderable is in that section and renderers what is needed allowing for quicker processing times. This is great as less checks are done to determine what was part of the picture and what was background which significantly along with finding the intersect points of the spheres quicker and overall giving a great performance boost to the ray tracer. reducing it down to the now six to seven seconds of processing time.

for (int c = 0; c < 8; c++)

{

for (int s = 0; s < m_contents.size(); s++)

{

if (m_contents[s]->m_position.x - m_contents[s]->m_radius < m_children[c]->maxCorner.x &&

m_contents[s]->m_position.x + m_contents[s]->m_radius > m_children[c]->minCorner.x &&

m_contents[s]->m_position.y - m_contents[s]->m_radius < m_children[c]->maxCorner.y &&

m_contents[s]->m_position.y + m_contents[s]->m_radius > m_children[c]->minCorner.y &&

m_contents[s]->m_position.z - m_contents[s]->m_radius < m_children[c]->maxCorner.z &&

m_contents[s]->m_position.z + m_contents[s]->m_radius > m_children[c]->minCorner.z)

{

m_children[c]->add(m_contents[s]);

}

}

m_children[c]->distribute(md - 1, ms);

}

m_contents.clear();

With the octree one, I did more research into other forms of optimization, however majority of what I read I was unable to actually implement due to my lacking C++ skills. The first one I looked up was SIMD operations or Symmetric Instructions and Multiple Data. This method involved performing the same instruction to multiple pieces of data simultaneously. Allowing for a reduction in processing time with the same data output processed. However there are faults with this system as multiple data sets cannot be processed by multiple instruction simultaneously. However this ray tracer, for the most part, performs the same instruction over and over again so a system like this would be quite handy.

Another method I researched was Inverse Square Root calculations and how that can be used to calculate light and reflection angles like with John Carmacks (I know he didn’t really invent it but it was easier just to say him) example with Quake and how it rendered its lightning and reflection angles with this small piece of code:

float InvSqrt (float x){

float xhalf = 0.5f*x;

int i = *(int*)&x;

i = 0x5f3759df - (i>>1);

x = *(float*)&i;

x = x*(1.5f - xhalf*x*x);

return x;

How this code work is that it uses Newton-Ralphon, which works as an approximation that starts off with a guess and is refined over iterations, slowly knocking off floating point values until it has the approximate int number it needs, the inverse square root of “i”.

To explain more deeply, “i” is the value we want to get the inverse square root of, its an int that has a initial float value. “i” is then set to “0x5f3759df” and minuses by itself, pushing all of it one bit to the right and knocking off ,at least, one of them in the process, halfing itself. This process is repeated until it has its approximate value.

However the issue with using something like this is that it requires a x86 (32) bit value instead of x64 bit number which could cause issues with more modern setups and hardware.

Overall I feel that my ray tracer optimization was a success, there could definitely be more added to it, especially all the other methods I researched but was unable to implement. I will defiantly look more into how I could implement them for the future.

Thanks for Reading.